In cost-effectiveness analysis, we deal with uncertainty in our parameters by performing sensitivity analyses. In this article, I review how we can generate these distributions for common paramters in a cost-effectiveness analysis. You can view the article at my RPubs page.

Using Excel VBA macros to conduct one-way sensitivity analyses and tornado diagram

INTRODUCTION

In pharmacoeconomic analysis, we oftentimes incorporate data from external sources to inform the parameters of our economic models. It’s not uncommon for us to have some degree of uncertainty surrounding the values of our parameters. The size and influence of each uncertainty may influence our conclusions, which can significantly change based on a single parameter. For instance, if a new drug (Drug A) entering the market had an incremental cost-effectiveness ratio (ICER) that was greater than the stakeholder’s willingness-to-pay (WTP) threshold when compared to standard of care (Drug B), lowering Drug A’s price may move the ICER to below the WTP threshold (Figure 1). This could change the conclusion of the study from “Drug A is not cost effective compared to Drug B,” to “Drug A is cost effective compared to Drug B.” Of course, this depends on where the WTP threshold is located on the cost-effectiveness plane, but it highlights the importance of understanding the influence of a single parameter to a pharmacoeconomic model’s conclusion. This type of analysis to understand the influence of a single parameter on the model’s conclusion can be illustrated using a one-way sensitivity analysis.

Figure 1. Example of the incremental cost-effectiveness ratio (ICER) changing due to a change in the price of Drug A.

Multiple one-way sensitivity analyses can be performed and arranged in a way to visualize the parameters with the greatest influence on the study’s outcome using a tornado diagram. The tornado diagram arranges the impact of each one-way sensitivity analyses from largest (top) to smallest (bottom) on the chart (Figure 2).

Figure 2. Example tornado diagram.

You can perform multiple one-way sensitivity analysis and arrange them in a tornado diagram using Excel’s Visual Basic Application (VBA) macros. In this tutorial, you’ll be able to create a series of VBA macros to conduct one-way sensitivity analyses and visualize these as a tornado diagram.

MOTIVATING EXAMPLE

We will use a hypothetical decision tree model to perform a series of one-way sensitivity analyses. You can download the Excel file and high resolution images for this tutorial from the GitHub repository for Decision Tree Tutorials here. The example decision tree and its parameters are illustrated in Figure 3.

Figure 3. Decision tree and relevant parameters.

The base-case ICER comparing Drug A to Drug B is $5875 per one additional Cure achieved (Figure 4). The ICER is located in the Northeast quadrant.

Figure 4. Results from the decision tree and cost-effectiveness plane.

We will create a macro that will replace the Base-case value with the LL and UL values (lower limit and upper limit, respectively) for every parameter and re-calculate the ICER each time.

Here is what the final one-way sensitivity analysis table will look like after the macros are run (Figure 5). Notice how the Number column is not in ascending order. That’s because this is ordered by the Spread column, which is the absolute difference between the UL_ICER and LL_ICER. We sort by the Spread because it allows for the tornado diagram to place the parameter with the greatest influence on the base-case ICER at the top and the parameter with the least influence at the bottom.

Figure 5. Results from the one-way sensitivity analysis.

We will create three macros. The first macro will sort the Number column in ascending order. This helps with the one-way sensitivity analysis calculations. The second macro will perform the one-way sensitivity analyses by replacing the Value with the LL or UL values. The last macro will sort the Spread in descending order for the tornado diagram.

Note: In this tutorial, the tornado diagram is already generated and mapped to the data on the one-way sensitivity analysis table. I wrote a tutorial on how to create a tornado diagram, which can be accessed here.

To create and edit macros, you will need to make sure that the Developer tab is available on the ribbon panel (Figure 6).

Figure 6. Developer tab on Excel’s ribbon panel.

In Excel, go to the File > Options. In the Options window, select Customize Ribbon and check the box next to Developer in the Main Tabs column (Figure 7).

Figure 7. Enabling the Develop tab in the Options window.

Once the Develop tab is enabled, we can begin to examine the macros. To open the VBA editor, go to the Develop tab and click on Macros as seen in Figure 8. There are several macros that we can see under the Macro window. Select the oneway_order macro and click on Edit. This will open the VBA editor where we can examine the macro.

Figure 8. Open the VBA editor.

The VBA editor has several important features.

In the left panel are the various macros and their location. The right panel contains the VBA code for the macro. The macro controls allow you run (“Play”), pause, or stop the macro.

Figure 9. VBA editor interface.

Note: Normally, we save our Excel file using the *.xlsx extension. However, when you have an Excel macro, you need to save the file as an *.xlsm extension. This will allow you to save your macros and enable them later.

VBA Macro 1 – Sorting the one-way sensitivity analysis table

Let’s examine the first macro: oneway_order

The VBA code first selects the sheet, which is labeled as OWSA model. Within the sheet, it selects the area defined by AM7:AV15 which is the one-way sensitivity analysis table in the Excel spreadsheet. The next part of the code orders the one-way sensitivity analysis table in ascending order based on the values in the Number column which is defined as AM8:AM15.

Note: Since this is on the same worksheet, we can leave the label of the active sheet as OWSA model. But if you were using different worksheets, you need to make sure to change the active sheet’s name.

The second part of the code tells the VBA macro to sort according to columns and that the first row contains the headers or column labels.

Figure 10. VBA Macro 1 – sort by ascending order the one-way sensitivity analysis table.

VBA Macro 2 – Re-calculate the ICERs with the lower and upper limits for each parameter

The second macro performs the one-way sensitivity analysis, and it is labelled as oneway_macro. Let’s take a close look at the VBA code for this macro. Notice how there is a low and high component in this macro. This is due to the LL and UL on the parameters table in the decision tree model. The oneway_macro will replace the base-case values with the value from the LL and UL columns in the decision tree model. This will need to be repeated for each parameter. In this decision tree model, there are 8 parameters that we will perform the one-way sensitivity analyses on. For the VBA macro, we will call these 0 to 7.

Figure 11 provides a close inspection of the macro and its elements in relation to the decision tree model in Excel. Notice that this is a loop function. The loop will start at 0 and end at 7 iterations, which is a total of 8 iterations, the same number of parameters in the decision tree. In the VBA macro, this is initiated with For low = 0 to 7 and then repeats with Next low until it reaches 8 iterations.

By changing these parameters with the LL and UL values, the ICER will be re-calculated. Once recalculated, we can update the one-way sensitivity analysis table with the new ICER value. In the VBA macro the re-calculated ICER is in cell AI7, and its value is imputed into the one-way sensitivity analysis table starting at cell AT8.

One the VBA macro completes, all 8 parameters will have undergone the one-way sensitivity analyses, and the re-calculated ICERs would be imputed into the one-way sensitivity analysis table; the LL_ICER and UL_ICER columns will contain the re-calculated ICERs for the LL and UL values from the parameters table.

Figure 11. VBA macro to perform series of one-way sensitivity analyses.

VBA Macro 3 – Re-sort the one-way sensitivity analysis table using the “Spread” in descending order

Once the ICERs have been re-calculated using the LL and UL from the parameters table, we can estimate their influence by calculating the absolute difference between the UL_ICER and LL_ICER. We want the absolute difference because we’re interested in visualizing the difference with the low and high values from each parameter.

Here is what VBA Macro 3 looks like:

This is very similar to the VBA Macro 1, which orders the one-way sensitivity analysis table based on the Number column. The only difference is that instead of using the Number column, VBA Macro 3 will sort in descending order the one-way sensitivity analysis using the Spread column, which contains the absolute difference between UL_ICER and LL_ICER.

Let’s take a look at the VBA macro closely (Figure 12).

Figure 12. VBA Macro 3 – re-order the one-way sensitivity analysis table by “Spread.”

The macro first selects the one-way sensitivity analysis table (AM7:AV15). It then selects the Spread column to sort in descending order (AM8:AM15). Once sorted, the macro will end.

Note: You may notice that the Order:=xlAscending option may sound counter-intuitive since we are sorting by descending order. The reason why the option has this in xlAscending order is because the tornado diagram uses a reverse axis on the Format Axis option (see Figure 13).

Figure 13. Format axis option to have the categories in reverse order.

Assigning macros

Once the one-way sensitivity analysis table is completed, we can assign these macros to buttons on the worksheet.

We can create buttons using the Shapes function in Excel. Right-click on the shape and select Assign macro. Create three of these. Figure 14 illustrates the steps to assign macros to each button.

Figure 14. Assigning macros to buttons in Excel.

We will assign oneway_order macro to the first button (Figure 15).

Figure 15. VBA Marco 1 assigned to the “Step 1” button.

We do the same thing for VBA Macro 2 (Figure 16).

Figure 16. VBA Macro 2 assigned to the “Step 2” button.

Lastly, we assign the final VBA macro to the “Step 3” button (Figure 17).

Figure 17. VBA Macro 3 assigned to the “Step 3” button.

Final steps

One all the buttons have been assigned to a VBA macro, we can click on each one in order to repeat the steps of re-calculating the ICER and updating the one-way sensitivity analysis table.

We can also see the changes to the tornado diagram (Figure 18).

Figure 18. Final tornado diagram.

At this stage of the tutorial, it’s good practice to inspect your entire Excel file to ensure that no data was accidentally lost or erased during the creating of the macros. Sometimes, if we select the wrong cells, we can overwrite our data. It’s good practice to save different versions of the Excel file so that you can return to your previous work in case something goes wrong.

Conclusions

Excel provides a power tool to generate VBA macros to perform functions iteratively. We leveraged this tool to perform a series of one-way sensitivity analyses which we then used to create a tornado diagram. Since this tutorial used a single worksheet, the process is much easier to code and diagnose. However, as we expand and create more worksheets in our Excel file, we need to be careful to select the proper sheets when using these VBA macros.

Acknowledgements

I used templates of previous one-way sensitivity analyses to build these VBA macros in this tutorial. Admittedly, it has been years since I first began doing this in Excel, and I don’t recall the sources. However, I wanted to acknowledge them as they deserve all the credit for helping me understand and apply these tools to building pharmacoeconomic models. If I find out the sources of these macros, I will post them here. I do know that my courses at the University of Washington was a source for some of the VBA macros for constructing pharmacoeconomic models, and I wanted to acknowledge their work and materials.

References

You can download the Excel file(s) from the GitHub Repository on Decision Tree Tutorials (link).

Disclaimers

This is a work in progress, therefore, there may be updates in the future.

This is for educational purposes only.

Constructing a Markov model for cost-effectiveness analysis using Excel: A tutorial

I wrote a tutorial on how to construct a Markov model using Excel, which is available on my RPubs site (link). This was meant to complement a workshop that I am preparing for trainees interested in pharmacoeconomics.

The Markov model is a versatile mathematical model that allows researchers to simulate a chronic disease for many years. It is unique due to its features such as disease states which can contain the costs and benefits associated with them.

I posted files associated with this tutorial on my GitHub Markov Model Tutorial respository (link). These include some readings to provide sufficient background and the Excel file with the Markov model example. To properly download these files, make sure to go to the “Raw” file and right clich on the “Raw” option then “Save link as” onto your computer. There is a detailed explanation on the RPubs tutorial.

This is a work in progress, so expect some updates in the future.

Generating Survival Curves from Study Data: An Application for Markov Models (Part 2 of 2)

BACKGROUND

In a previous blog, we provided instructions on how to generate the Weibull curve parameters (λ and γ) from an existing Kaplan-Meier curve. The Weibull parameters will allow you to generate survival curves for cost-effectiveness analysis. In the second part of this tutorial, we will take you through the process of incorporating these Weibull parameters to simulate survival using a simple three-state Markov model. Finally, we’ll show how to extrapolate the survival curve to go beyond the time frame of the Kaplan-Meier curve so that you can perform cost-effectiveness analysis across a lifetime horizon.

In this tutorial, I will:

Describe how to incorporate the Weibull parameters into a Markov model

Compare the survival probability of the Markov model to the reference Kaplan-Meier curve to validate the method and catch any errors

Extrapolate the survival curve across a lifetime horizon

Link to the Markov model used in this tutorial can be found here.

MOTIVATING EXAMPLE

We will use a three-state Markov model to illustrate how to incorporate the Weibull parameters and generate a survival curve (Figure 1).

Figure 1. Markov model.

To simulate a Markov model using 40-time units (e.g., months), you will need to think about the different transition probabilities. Figure 2 lists the different transition probabilities and their calculations. We made the assumption that the transition from the Healthy state to the Sick state was 20% across all time points.

Figure 2. Transition probabilities for all health states and associated calculations.

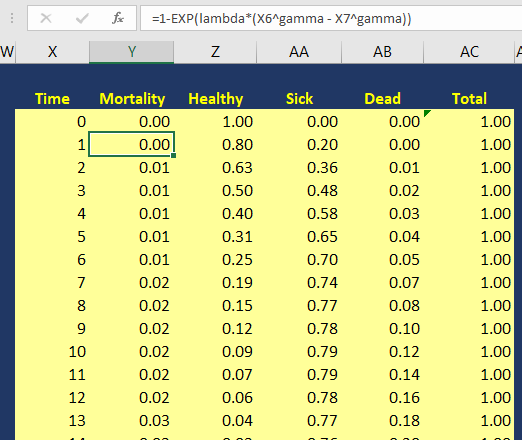

The variable pDeath(t) denotes the probability of mortality as a function of time. Since we have the lambda (λ=0.002433593) and gamma (γ=1.722273465) Weibull parameters, we can generate the Weibull curve using Excel. Figure 3 illustrates how I set up the Markov model in Excel. I used the following equation to estimate the pDeath(t):

where t_i is some time point at i. This expression can be written in Excel as (assuming T=1 and T+1 = 2):

= 1 – EXP(lambda*(T^gamma – (T+1)^gamma))

Figure 3. Calculating the probability of mortality as a function of time in Excel.

You can estimate the probability of survival as a function of time S(t) by subtracting pDeath(t) from 1. Once you have these values, you can compare how well your Markov model was able to simulate the survival compared to the observed Kaplan-Meier curve (Figure 4).

Figure 4. Survival curve comparison between the Markov model and Kaplan-Meier curve.

Once we are comfortable with the simulated survival curve, we can extrapolate the survival probability beyond the limits of the Kaplan-Meier curve. To do this, we will need to go beyond the reference Kaplan-Meier’s time period of 40 months. In Figure 5, I extended the time cycle (denoted as Time) from 40 to 100 (truncated at 59 months).

Figure 5 illustrates the Weibull distribution extrapolated out to an entire cohort’s life time in the Markov model (Figure 5 is truncated at 59 months to fit this into the tutorial).

Figure 6 provides an illustration of the lifetime survival of the cohort after extrapolating the time period from 40 months to 100 months. The survival curve does a relative good job of modeling the Kaplan-Meier curve. As the time period extends beyond 40 months, the Weibull curve will exponentially reach a point where all subjects will enter the Death state. This is reflected in the flat part of the Weibull curve at the late part of the time period.

Figure 6. Lifetime survival of the cohort using the Weibull extrapolation.

SUMMARY

After extrapolating the survival curve beyond the reference Kaplan-Meier curve limit of 40 months, you can estimate the lifetime horizon for a cohort of patients using a Markov model. This method is very useful when simulating chronic diseases. However, it is always good practice to calibrate your survival curves with the most recent data on the population of interest.

The U.S. National Center for Health Statistics has life tables that you can use to estimate the life expectancy of the general population, which you can compare to your simulated cohort. Moreover, if you want to compare your simulated cohort’s survival performance to a reference specific to your chronic disease cohort, you can search the literature for previously published registry data or epidemiology studies. Using existing studies as a reference will allow you to make adjustments to your survival curves that will give them credibility and validation to your cost-effectiveness analysis.

CONCLUSIONS

Using the Kaplan-Meier curves from published sources can help you to generate your own time-varying survival curves for use in a Markov model. Using the Hoyle and Henley’s Excel template to generate the survival probabilities, which are then used in an R script to generate the lambda and gamma parameters, provides a powerful tool to integrate Weibull parameters into a Markov model. Moreover, we can take advantage of the Weibull distribution to extrapolate the survival probability over the cohort’s lifetime giving us the ability to model lifetime horizons.

The Excel template developed by Hoyle and Henley generates other parameters that can be used in probabilistic sensitivity analysis like the Cholesky decomposition matrix, which will be discuss in a later blog.

REFERENCES

Location of Excel spreadsheet developed by Hoyle and Henley (Update 02/17/2019: I learned that Martin Hoyle is not hosting this on his Exeter site due to a recent change in his academic appointment. For those interested in getting access to the Excel spreadsheet used in this blog, please download it at this link).

Location of the Markov model used in this exercise is available in the following link:

https://www.dropbox.com/sh/ztbifx3841xzfw9/AAAby7qYLjGn8ZfbduJmAsVva?dl=0

Symmetry Solutions. “Engauge Digitizer—Convert Images into Useable Data.” Available at the following url: https://www.youtube.com/watch?v=EZTlyXZcRxI

Engauge Digitizer: Mark Mitchell, Baurzhan Muftakhidinov and Tobias Winchen et al, "Engauge Digitizer Software." Webpage: http://markummitchell.github.io/engauge-digitizer [Last Accessed: February 3, 2018].

Hoyle MW, Henley W. Improved curve fits to summary survival data: application to economic evaluation of health technologies. BMC Med Res Methodol 2011;11:139.

ACKNOWLEDGMENTS

I want to thank Solomon J. Lubinga for helping me with my first attempt to use Weibull curves in a cost-effectiveness analysis. His deep understanding and patient tutelage are characteristics that I aspire to. I also want to thank Elizabeth D. Brouwer for her comments and edits, which have improved the readability and flow of this blog. Additionally, I want to thank my doctoral dissertation chair, Beth Devine, for her edits and mentorship.

Generating Survival Curves from Study Data: An Application for Markov Models (Part 1 of 2)

BACKGROUND

In cost-effectiveness analysis (CEA), a life-time horizon is commonly used to simulate a chronic disease. Data for mortality are normally derived from survival curves or Kaplan-Meier curves published in clinical trials. However, these Kaplan-Meier curves may only provide survival data up to a few months to a few years. Extrapolation to a lifetime horizon is possible using a series of methods based on parametric survival models (e.g., Weibull, exponential); but performing these projections can be challenging without the appropriate data and software.

This blog provides a practical, step-by-step tutorial to estimate a parameter method (Weibull) from a survival function for use in CEA models. Specifically, I will describe how to:

Capture the coordinates of a published Kaplan-Meier curve and export the results into a *.CSV file

Estimate the survival function based on the coordinates from the previous step using a pre-built template

Generate a Weibull curve that closely resembles the survival function and whose parameters can be easily incorporated into a simple three-state Markov model

MOTIVATING EXAMPLE

We will use an example dataset from Stata’s data library. (You can use any published Kaplan-Meier curve. I use Stata's data library for convenience.) Open Stata and enter the following in the command line:

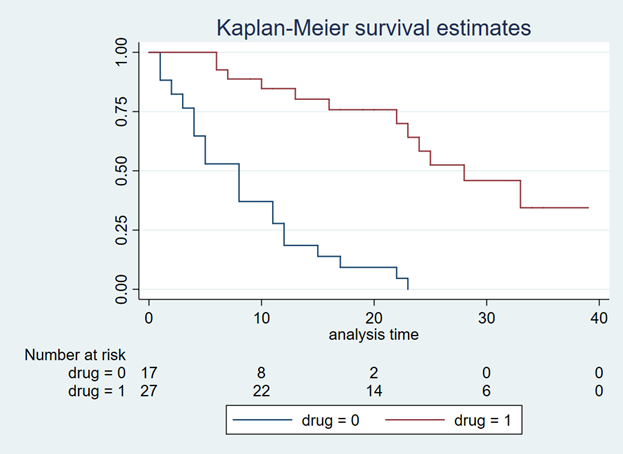

use http://www.stata-press.com/data/r15/drug2b sts graph, by(drug) risktable

You should get a Kaplan-Meier curve that illustrates the survival probability of two different drugs (Figure 1). The Y-axis denotes the survival probability and the X-axis denotes the time in months. Below the figure is the number at risk for the two drug comparators. We will need this to generate our Weibull curves. (If possible, find a Kaplan-Meier curve with the number at risk. It will make the Weibull curve generation easier.) Alternative methods exist to use Kaplan-Meier curves without the number at risk, but they will not be discussed in this tutorial.

Figure 1. Kaplan-Meier curve.

You will need to download the “Engauge Digitizer” application to convert this Kaplan-Meier curve into a *.CSV file with the appropriate data points. This will help you to develop an accurate survival curve based on the Kaplan-Meier curve. You can download the “Engauge Digitizer” application here: https://markummitchell.github.io/engauge-digitizer/

After you download “Engauge Digitizer,” open it and import the Kaplan-Meier file. Your interface should look like the following:

Figure 2. Engauge Digitizer interface.

The right panel guides you in digitizing your Kaplan-Meier figure. Follow this guide carefully. I will not go into how to use “Engauge Digitizer;” however a YouTube video tutorial to use Engauge Digitizer was developed by Symmetry Solutions and is available here.

We will use the top Kaplan-Meier curve (which is highlighted with blue crosshairs in Figure 3) to generate our Weibull curves.

Figure 3. Select the top curve to digitize.

After you digitize the figure, you will export the data as a *.CSV file. The *.CSV file should have two columns corresponding to the X- and Y-axes of the Kaplan-Meier figure. Figure 4 has the X values end at row 20 to fit onto the page, but this extends till the end of the Kaplan-Meier time period, which is 40.

Figure 4. *.CSV file generated from the Kaplan-Meier curve (truncated to fit onto this page).

I usually superimpose the “Engauge Digitizer” results with the actual Kaplan-Meier figure to prove to myself (and others) that the curves are exactly the same (Figure 5). This is a good practice to convince yourself that your digitized data properly reflects the Kaplan-Meier curve from the study.

Figure 5. Kaplan-Meier curve superimposed on top of the Engauge Digitizer curve.

Now, that we have the digitized version of the Kaplan-Meier, we need to format the data to import into the Weibull curve generator. Hoyle and Henley wrote a paper that explains their methods for using the results from the digitizer to generate Weibull curves.[1] We will use the Excel template they developed in order to generate the relevant Weibull curve parameters. (The link to the Excel template is provided at the end of this tutorial.)

I always format the data to match the Excel template developed by Hoyle and Henley. The blue box indicates the number at risk at the time points denoted by Figure 1 and the red box highlights the evenly spaced time intervals that I estimated (Figure 6).

Figure 6. Setting up your data using the template from Hoyle and Henley.

In order to find the survival probability at each “Start time” listed in the Excel template by Hoyle and Henley, linear interpolation is used. [You can use other methods to estimate the survival probability between each time points given the data on Figure 3 (e.g., last observation carried forward); however, I prefer to use linear interpolation.] In Figure 7, the survival probabilities (Y) correspond to a time (X) that was generated by the digitizer. Now, we want to find the Y value corresponding to the X values on the Excel template.

Figure 7. Generating the Y-values using linear interpolation.

Figure 8 illustrates how we apply the linear interpolation to estimate the Y value that corresponds with the X values from the Excel template developed by Hoyle and Henley. For example, if you were interested in finding the Y value at X = 10, the you would need to input the following into the linear interpolation equation using the following expression:

This yields a Y value of 0.866993, which is approximately 0.87.

Figure 8. Y values are generated using linear interpolation.

After generating the Y values corresponding to the Start time from Figure 5, you can enter them into the Excel template by Hoyle and Henley (Figure 9). Figure 9 illustrates the inputted survival probabilities into the Excel template.

Figure 9. Survival probabilities are entered after estimating them from linear interpolation.

After the “Empirical survival probability S(t)” is populated, you will need to go to the “R Data” worksheet in the Excel template and save this data as a *.CSV file. In this example, I saved the data as “example_data.csv” (Figure 10).

Figure 10. Data is saved as “example_data.csv.”

Then I used the following R code to estimate the Weibull parameters. This R code is located in the “Curve fitting ‘R’ code” in the Excel templated developed by Hoyle and Henley. (I modified the R code written by Hoyle and Henley to allow for a *.CSV file import.)

rm(list=ls(all=TRUE))

library(survival)

# Step 4. Update directory name and text file name in line below

setwd("insert the folder path where the data is stored")

data<- read.csv("example_data.csv")

attach(data)

data

times_start <-c( rep(start_time_censor, n_censors), rep(start_time_event, n_events) )

times_end <-c( rep(end_time_censor, n_censors), rep(end_time_event, n_events) )

# adding times for patients at risk at last time point

######code does not apply because 0 at risk at last time point

######code does not apply because 0 at risk at last time point

# Step 5. choose one of these function forms (WEIBULL was chosen for the example)

model <- survreg(Surv(times_start, times_end, type="interval2")~1, dist="exponential") # Exponential function, interval censoring

model <- survreg(Surv(times_start, times_end, type="interval2")~1, dist="weibull") # Weibull function, interval censoring

model <- survreg(Surv(times_start, times_end, type="interval2")~1, dist="logistic") # Logistic function, interval censoring

model <- survreg(Surv(times_start, times_end, type="interval2")~1, dist="lognormal") # Lognormal function, interval censoring

model <- survreg(Surv(times_start, times_end, type="interval2")~1, dist="loglogistic") # Loglogistic function, interval censoring

# Compare AIC values

n_patients <- sum(n_events) + sum(n_censors)

-2*summary(model)$loglik[1] + 1*2 # AIC for exponential distribution

-2*summary(model)$loglik[1] + 1*log(n_patients) # BIC exponential

-2*summary(model)$loglik[1] + 2*2 # AIC for 2-parameter distributions

-2*summary(model)$loglik[1] + 2*log(n_patients) # BIC for 2-parameter distributions

intercept <- summary(model)$table[1] # intercept parameter

log_scale <- summary(model)$table[2] # log scale parameter

# output for the example of the Weibull distribution

lambda <- 1/ (exp(intercept))^ (1/exp(log_scale)) # l for Weibull, where S(t) = exp(-lt^g)

gamma <- 1/exp(log_scale) # g for Weibull, where S(t) = exp(-lt^g)

(1/lambda)^(1/gamma) * gamma(1+1/gamma) # mean time for Weibull distrubtion

# For the Probabilistic Sensitivity Analysis, we need the Cholesky matrix, which captures the variance and covariance of parameters

t(chol(summary(model)$var)) # Cholesky matrix

# Simulate variability of mean for Weibull

library(MASS)

simulations <- 10000 # number of simulations for standard deviation of mean

sim_parameters <- mvrnorm(n=simulations, summary(model)$table[,1], summary(model)$var ) # simulates simulations from multivariate normal

intercepts <- sim_parameters[,1] # intercept parameters

log_scales <- sim_parameters[,2] # log scale parameters

lambdas <- 1/ (exp(intercepts))^ (1/exp(log_scales)) # l for Weibull, where S(t) = exp(-lt^g)

gammas <- 1/exp(log_scales) # g for Weibull, where S(t) = exp(-lt^g)

means <- (1/lambdas)^(1/gammas) * gamma(1+1/gammas) # mean times for Weibull distrubtion

sd(means) # standard deviation of mean survival

# consider adding this (from Arman Oct 2016) to plot KM

km <- survfit(Surv(times_start, times_end, type="interval2")~ 1)

summary(km)

plot(km, xmax=600, xlab="Time (Days)", ylab="Survival Probability")

There are several elements generated by the above R code that you need to record, including the intercept and log-scale:

> intercept [1] 3.494443 > log_scale [1] -0.5436452

Once you have this, input them into the Excel template sheet titled “Number events & censored,” which is the same sheet you used to generate the survival probabilities after entering the data from the “Engauge Digitizer.” Figure 11 illustrates where these parameters are entered (red square).

Figure 11. Enter the intercept and log scale parameters into the Excel template developed by Hoyle and Henley.

You can check the fit of the Weibull curve to the observed Kaplan-Meier curve in the tab “Kaplan-Meier.” Figure 12 illustrates the Weibull fit’s approximation of the observed Kaplan-Meier curve.

Figure 12. Weibull fit (red curve) of the observed Kaplan-Meier curve (blue line).

From Figure 11, we also have the lambda (λ=0.002433593) and gamma (γ=1.722273465) parameters which we’ll use to simulate survival using a Markov model.

SUMMARY

In the next blog, we will discuss how to use the Weibull parameters to generate a survival curve using a Markov model. Additionally, we will learn how to extrapolate the survival curve beyond the time period used to generate the Weibull parameters for cost-effectiveness studies that use a lifetime horizon.

REFERENCES

Location of Excel spreadsheet developed by Hoyle and Henley (Update 02/17/2019: I learned that Martin Hoyle is not hosting this on his Exeter site due to a recent change in his academic appointment. For those interested in getting access to the Excel spreadsheet used in this blog, please download it at this link).

Update: 12 January 2023 - The old link to the YouTube video on Engauge was broken. A new link was identified that provided the same content on how to use Engauge.

Location of the Markov model used in this exercise is available in the following link:

https://www.dropbox.com/sh/ztbifx3841xzfw9/AAAby7qYLjGn8ZfbduJmAsVva?dl=0

Design with Greg. “Engauge: A Free Tool for Engineering that pairs great with Excel.” Available at the following url: https://www.youtube.com/watch?v=i1bEFovvvbM

Engauge Digitizer: Mark Mitchell, Baurzhan Muftakhidinov and Tobias Winchen et al, "Engauge Digitizer Software." Webpage: http://markummitchell.github.io/engauge-digitizer [Last Accessed: February 3, 2018].

Hoyle MW, Henley W. Improved curve fits to summary survival data: application to economic evaluation of health technologies. BMC Med Res Methodol 2011;11:139.

ACKNOWLEDGMENTS

I want to thank Solomon J. Lubinga for helping me with my first attempt to use Weibull curves in a cost-effectiveness analysis. His deep understanding and patient tutelage are characteristics that I aspire to. I also want to thank Elizabeth D. Brouwer for her comments and edits, which have improved the readability and flow of this blog. Additionally, I want to thank my doctoral dissertation chair, Beth Devine, for her edits and mentorship.