Background

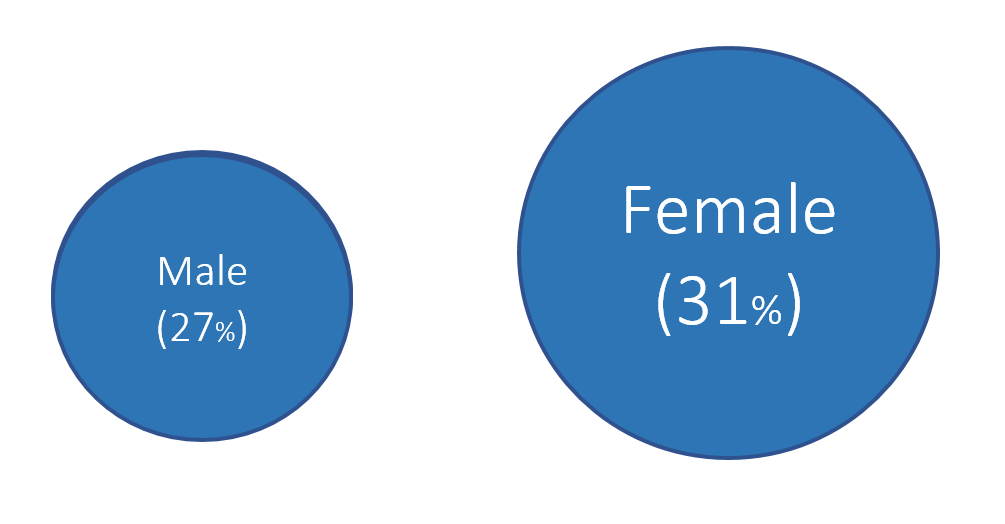

As we decide which type of chart to use for our data, we should also think about our color scheme. Color should represent the data value: we should be able to look at a chart, identify the color that interests us, and associate a value with it. However, it is easy to misidentify the value that a color is supposed to represent, and just as easy to misjudge the magnitude of the difference between groups.

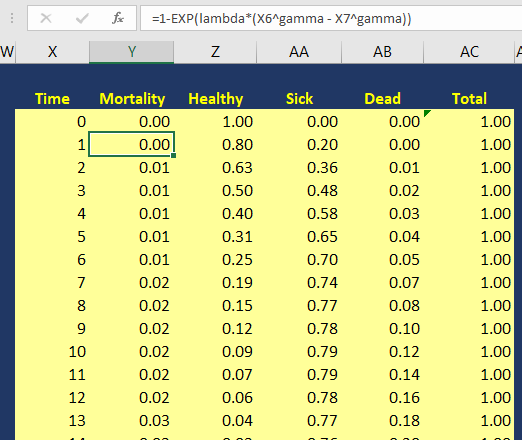

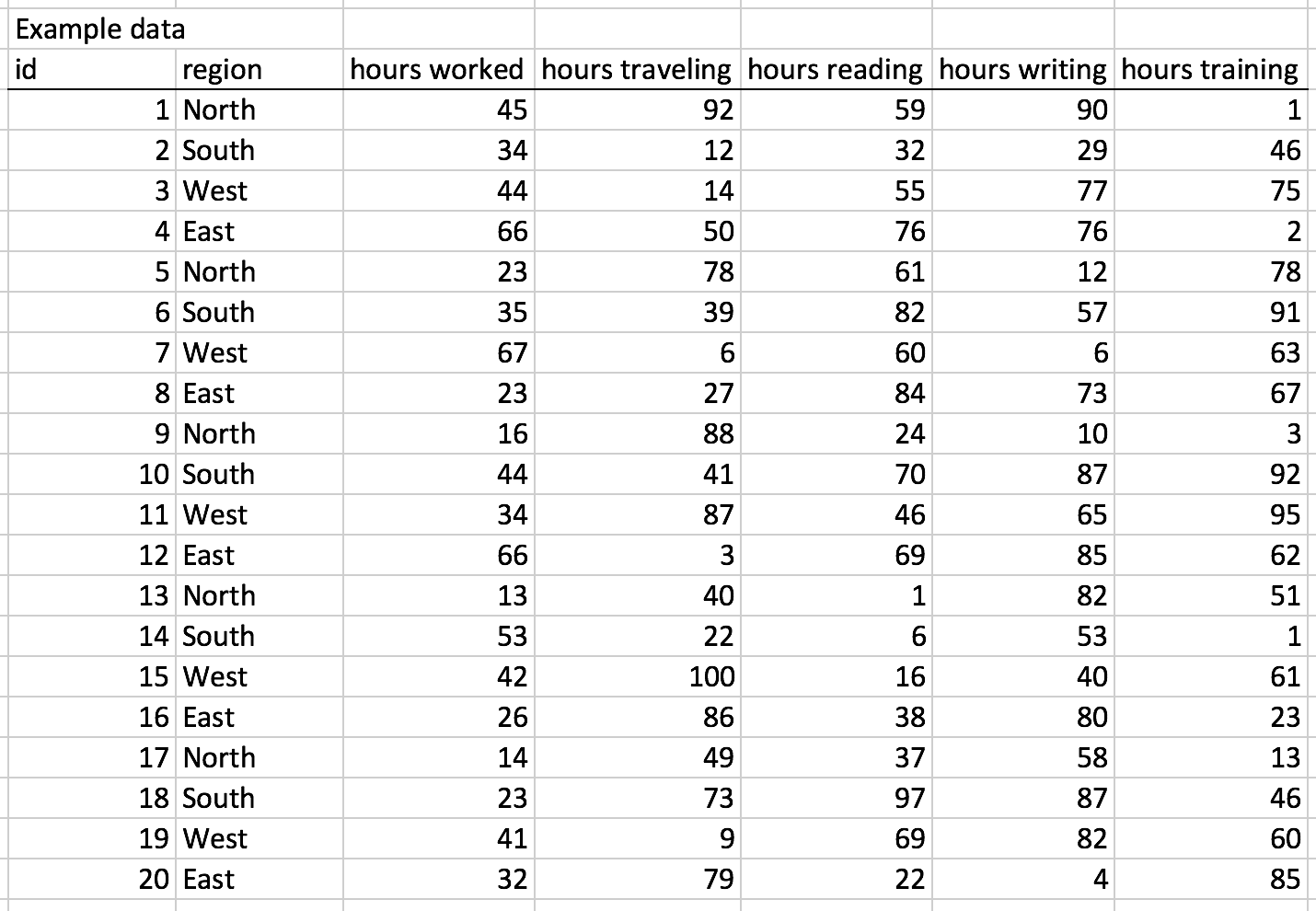

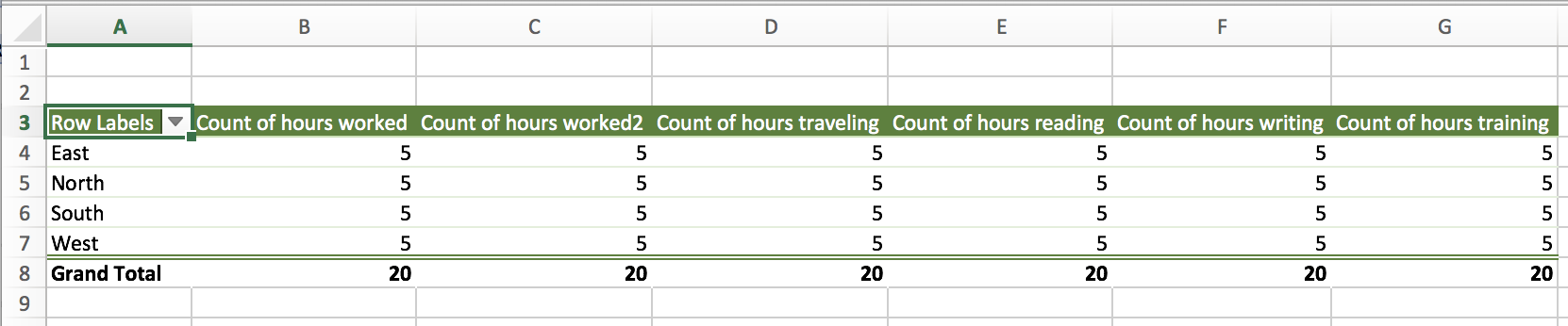

In this tutorial, we will discuss some basic elements of color theory that will help you select the best color palette for your graphical presentations. We will use the following simple data (Figure 1) and color schemes (Figure 2) to illustrate some of these lessons. The X column denotes an arbitrary category labeled with a letter of the alphabet, and the Y column denotes the value associated with each category.

Figure 1. Example data used to illustrate the different color schemes.

Figure 2. Color schemes used in this example (single-hue color and rainbow color schemes).

Bias-Precision Tradeoff

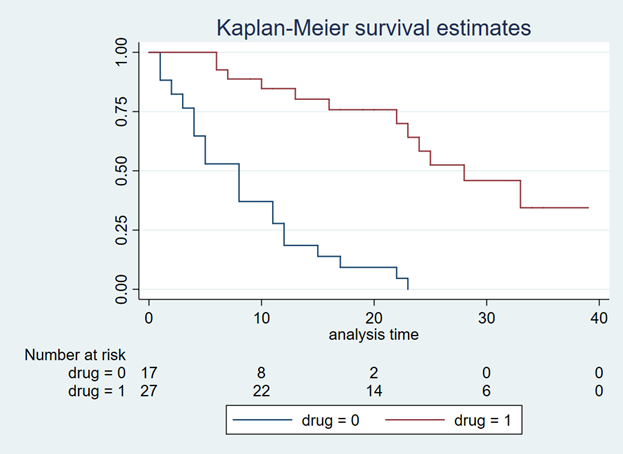

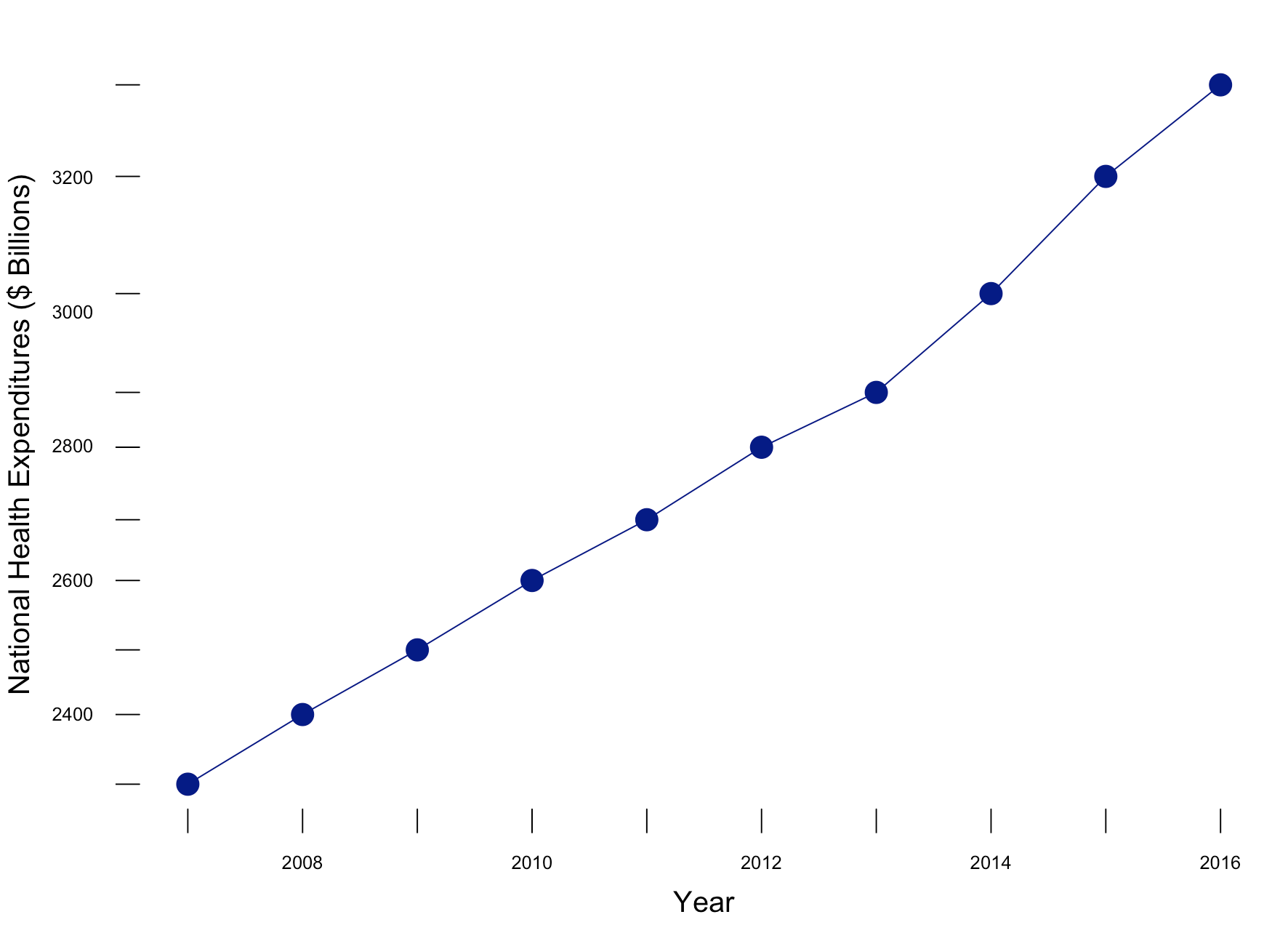

There is always a tradeoff between bias and precision. Bias concerns accuracy: how close the value we read from a color is to the true data value. Precision concerns consistency: how little our readings vary from one to the next. Figure 3 illustrates the difference between the two.

Figure 3. Bias and Precision tradeoff.

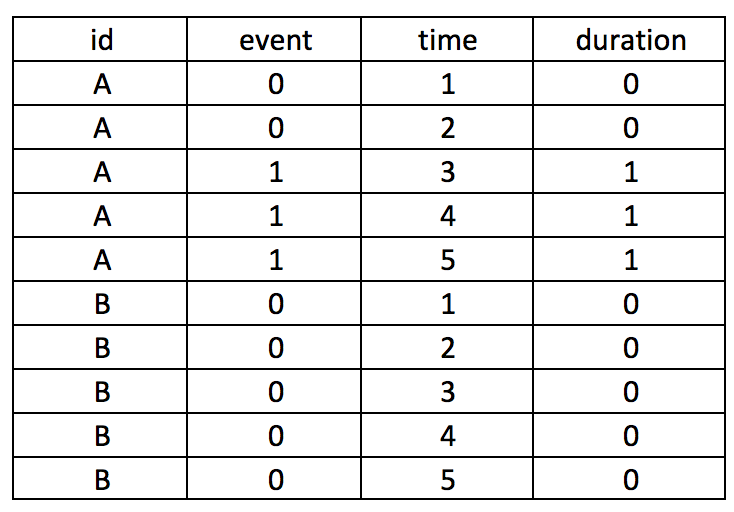

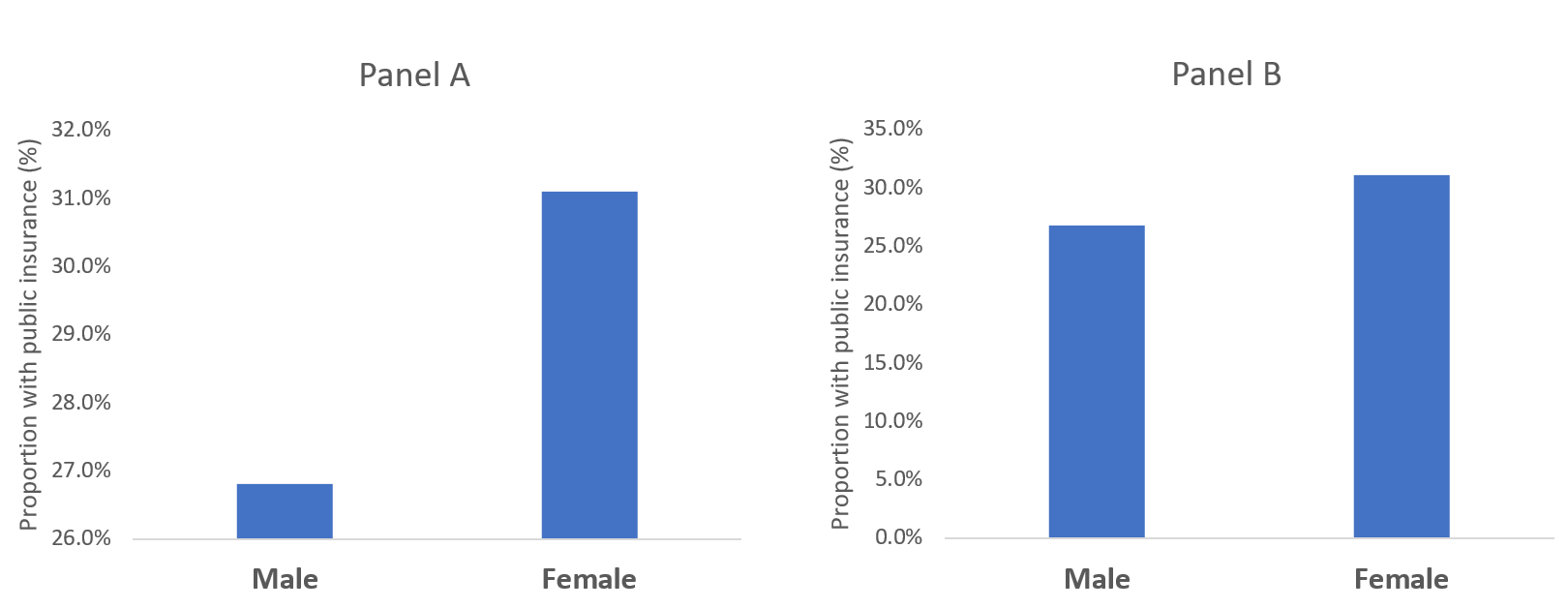

For example, with a single-hue progression color scheme (blue), it is easy to tell which color represents the higher value and which represents the lower value. In Figure 4A, the darker shade of blue is associated with a higher value than the lighter shade of blue. This is harder with a rainbow color scheme, because each value can be associated with an arbitrary color (Figure 4B). In other words, Figure 4A helps to reduce bias, while Figure 4B can generate a lot of bias.

Figure 4. Comparison between single hue color scheme and rainbow color scheme.

On the other hand, the groups are easier to tell apart in Figure 4B. You can easily distinguish Group F from Group E because their colors are clearly different. In Figure 4A, however, it is very difficult to distinguish Group F from Group E because the shades of blue associated with the two groups look similar. The ability to precisely detect differences between groups is therefore more limited with the single-hue color scheme (Figure 4A) than with the rainbow color scheme (Figure 4B).
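To make the contrast between the two schemes concrete, here is a minimal Python sketch that uses only the standard library `colorsys` module. The palette functions, the hue and lightness ranges, and the use of Euclidean distance in RGB space are all assumptions made for illustration. They are not the palettes used in Figure 4, and RGB distance is only a crude proxy for how different two colors actually look.

```python
import colorsys


def single_hue_palette(n, hue=0.6, saturation=0.8, light=0.85, dark=0.25):
    """Return n RGB tuples of one hue (blue by default), from light to dark."""
    step = (light - dark) / (n - 1)
    return [colorsys.hls_to_rgb(hue, light - i * step, saturation)
            for i in range(n)]


def rainbow_palette(n, lightness=0.5, saturation=1.0, max_hue=0.8):
    """Return n RGB tuples whose hue sweeps from red toward violet."""
    return [colorsys.hls_to_rgb(max_hue * i / (n - 1), lightness, saturation)
            for i in range(n)]


def lightness(rgb):
    """HLS lightness of an RGB tuple (0 = black, 1 = white)."""
    return colorsys.rgb_to_hls(*rgb)[1]


def adjacent_distances(palette):
    """Euclidean RGB distance between each pair of neighboring colors."""
    return [sum((a - b) ** 2 for a, b in zip(c1, c2)) ** 0.5
            for c1, c2 in zip(palette, palette[1:])]


# Six hypothetical groups, A through F.
single = single_hue_palette(6)
rainbow = rainbow_palette(6)

print("single-hue lightness:", [round(lightness(c), 2) for c in single])
print("rainbow lightness:   ", [round(lightness(c), 2) for c in rainbow])
print("single-hue neighbor distances:",
      [round(d, 2) for d in adjacent_distances(single)])
print("rainbow neighbor distances:   ",
      [round(d, 2) for d in adjacent_distances(rainbow)])
```

Under these assumptions, the single-hue palette gets steadily darker from one group to the next, which gives the reader an ordering cue, but its neighboring colors sit much closer together than the neighboring colors of the rainbow palette. The rainbow palette has the opposite profile: its neighbors are far apart, but its lightness is constant, so lightness says nothing about which group has the larger value.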

Another way to look at these principles is to think about the agreement between the true value and the estimated value. Suppose you were given these color schemes and asked to estimate the value that each color represents. How do you think you’ll do? Would it be easier to estimate the true value from the single-hue color scheme or the rainbow color scheme?

Figure 5. Performance of color schemes according to estimated and true values.

I generated the data in Figure 5 to illustrate the principles in this tutorial. Figure 5 compares the performance of the single-hue color scheme and the rainbow color scheme. Figure 5A has low bias but some scatter (moderate precision) around the gray line, which denotes 1:1 agreement between the true and estimated values. Figure 5B, in contrast, has high bias but very little scatter (high precision): the groups are easy to identify, but there is uncertainty in identifying the true value for each group.
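One way to put numbers on this comparison is to summarize the errors between estimated and true values. The sketch below is a minimal illustration and does not use the data behind Figure 5. The `true_values` and both lists of estimates are made up, and the function name `bias_and_spread` is hypothetical. Bias is taken as the mean error, and the sample standard deviation of the errors stands in for precision (a smaller spread means higher precision).

```python
from statistics import mean, stdev


def bias_and_spread(true_values, estimates):
    """Return (mean error, sample standard deviation of the errors)."""
    errors = [est - true for true, est in zip(true_values, estimates)]
    return mean(errors), stdev(errors)


# Hypothetical values for six groups; not the data shown in Figure 5.
true_values = [10, 20, 30, 40, 50, 60]

# Made-up readings that scatter around the truth (low bias, more scatter).
single_hue_estimates = [12, 17, 33, 38, 53, 57]

# Made-up readings that are consistently too high (high bias, little scatter).
rainbow_estimates = [18, 29, 38, 47, 58, 68]

for name, estimates in [("single-hue", single_hue_estimates),
                        ("rainbow", rainbow_estimates)]:
    bias, spread = bias_and_spread(true_values, estimates)
    print(f"{name}: bias = {bias:.2f}, spread = {spread:.2f}")
```

With these made-up numbers, the single-hue readings average out close to the truth but vary more from group to group, while the rainbow readings are tightly clustered but shifted away from the 1:1 line, which mirrors the pattern described for Figures 5A and 5B.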

Summary

When deciding on the color scheme for your data, take into consideration what is more important. Is it critical that your audience precisely distinguish one group from another? Or is it more important to have them visually identify the correct value associated with the color? Ideally, you want to be able to use a color scheme that has low bias and high precision, but in reality, you will need to make a tradeoff between the two.

References

I used the following references to develop this tutorial.

Liu Y, Heer J. Somewhere over the rainbow: An empirical assessment of quantitative colormaps. ACM Human Factors in Computing Systems (CHI) 2018, April 21-26, 2018, Montreal, QC, Canada. http://dx.doi.org/10.1145/3173574.3174172

Cromwell W. Colour schemes in data visualisation: Bias and Precision. Presented at the useR! 2016 international R User conference, June 15, 2017. URL: https://channel9.msdn.com/Events/useR-international-R-User-conference/useR2016/Colour-schemes-in-data-visualisation-Bias-and-Precision